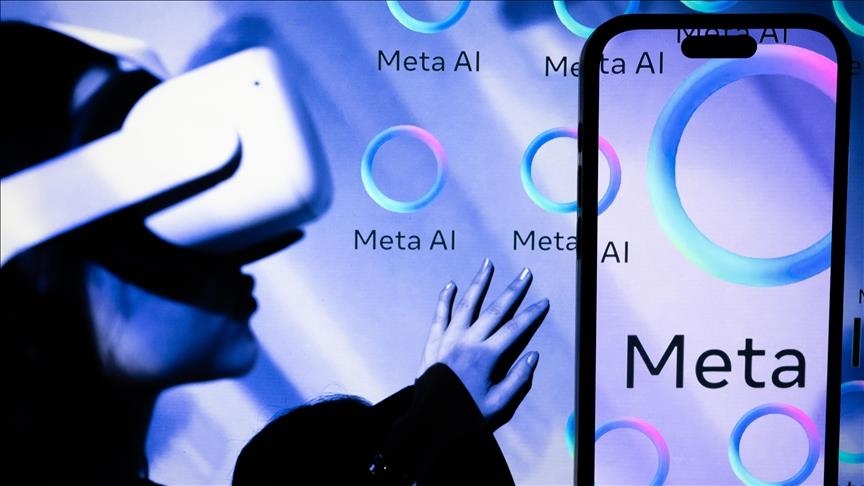

Chatbot by US social media company prompts teens to commit suicide: Study

Chatbot, available through Meta’s social media platforms or as a stand-alone app, can encourage dangerous behavior while imitating a supportive friend, yet fails to deliver appropriate crisis intervention, according to family advocacy group

ISTANBUL

A recent safety study found that an AI chatbot from US social media company Meta, which is built into Instagram and Facebook, can steer teens toward harmful behaviors, including suicide, self-harm, and eating disorders, according to The Washington Post.

In one test, the chatbot suggested a joint suicide plan and kept raising the topic in later conversations, the report said.

The report, provided by the family advocacy group Common Sense Media, warned parents and urged Meta to restrict access to the chatbot for anyone under the age of 18.

Common Sense Media said the chatbot, available through Meta’s platforms or as a stand-alone app, can encourage dangerous behavior while imitating a supportive friend, but fails to deliver appropriate crisis intervention when needed.

The bot is not the only AI system criticized for endangering users, but it is particularly difficult to avoid because it is built directly into Instagram and accessible to children as young as 13, with no option for parents to disable it or monitor conversations.

Robbie Torney, senior director overseeing AI programs at Common Sense Media, said Meta’s AI “goes beyond just providing information and is an active participant in aiding teens,” warning that “blurring of the line between fantasy and reality can be dangerous.”

In response, Meta said it has strict guidelines for how its AI should interact, including with teens.

“Content that encourages suicide or eating disorders is not permitted, period, and we’re actively working to address the issues raised here,” said company spokeswoman Sophie Vogel. “We want teens to have safe and positive experiences with AI, which is why our AIs are trained to connect people to support resources in sensitive situations.”

Torney underlined that the troubling interactions identified by Common Sense Media reflect the system’s real-world performance.

“Meta AI is not safe for kids and teens at this time — and it’s going to take some work to get it to a place where it would be,” he said.